Transformers, the innovative technology behind language models like ChatGPT, are revolutionizing fields beyond chatbots and content generation. What if we could use this powerful architecture to improve the way we invest?

Affor Analytics constantly explores cutting-edge research in finance and machine learning, with a focus on enhancing investment strategies. Few developments in that research have been as significant as Transformers, introduced in the groundbreaking 2017 paper “Attention Is All You Need.” Discover how Transformers work, their strengths and weaknesses, and their potential applications in the finance industry.

A brief history of neural networks

Artificial neural networks date back to the 1940s, when researchers took inspiration from the interconnected neurons of the human brain. These early models were simplistic, but they laid the groundwork for the sophisticated neural network architectures used in machine learning today.

Neural networks consist of nodes organized in layers that process and transmit information. Each connection between nodes has a weight that determines its strength. Activation functions at each layer introduce non-linearity, enabling the network to learn complex patterns. Data is fed forward through the network, and the output layer produces the prediction. During training, backpropagation measures how much each weight contributed to the error (the difference between predicted and actual outputs), and an optimization algorithm such as gradient descent uses that information to adjust the weights, gradually refining the model’s accuracy.
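To make the forward pass, backpropagation, and weight update concrete, here is a minimal sketch in NumPy. It assumes a tiny two-layer network (tanh hidden layer, sigmoid output) trained on the XOR toy problem with a mean-squared-error loss; the function name `train_xor` and all hyperparameters are illustrative choices, not part of any particular library or of Affor Analytics’ models.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-layer network on XOR with plain gradient descent.

    Returns the mean-squared-error loss recorded at every epoch.
    """
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Weights (connection strengths) and biases for each layer.
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(epochs):
        # Forward pass: linear transform followed by a non-linear activation.
        h = np.tanh(X @ W1 + b1)
        y_hat = sigmoid(h @ W2 + b2)
        error = y_hat - y
        losses.append(float(np.mean(error ** 2)))

        # Backward pass: apply the chain rule from the output layer back.
        d_out = 2 * error / len(X) * y_hat * (1 - y_hat)  # dLoss / d(pre-sigmoid)
        dW2 = h.T @ d_out
        db2 = d_out.sum(axis=0)
        d_h = (d_out @ W2.T) * (1 - h ** 2)               # back through tanh
        dW1 = X.T @ d_h
        db1 = d_h.sum(axis=0)

        # Gradient-descent update: nudge every weight against its gradient.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return losses

losses = train_xor()
print(f"loss at start: {losses[0]:.4f}, loss at end: {losses[-1]:.4f}")
```

Backpropagation here is just the chain rule written out by hand; frameworks such as PyTorch compute the same gradients automatically.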

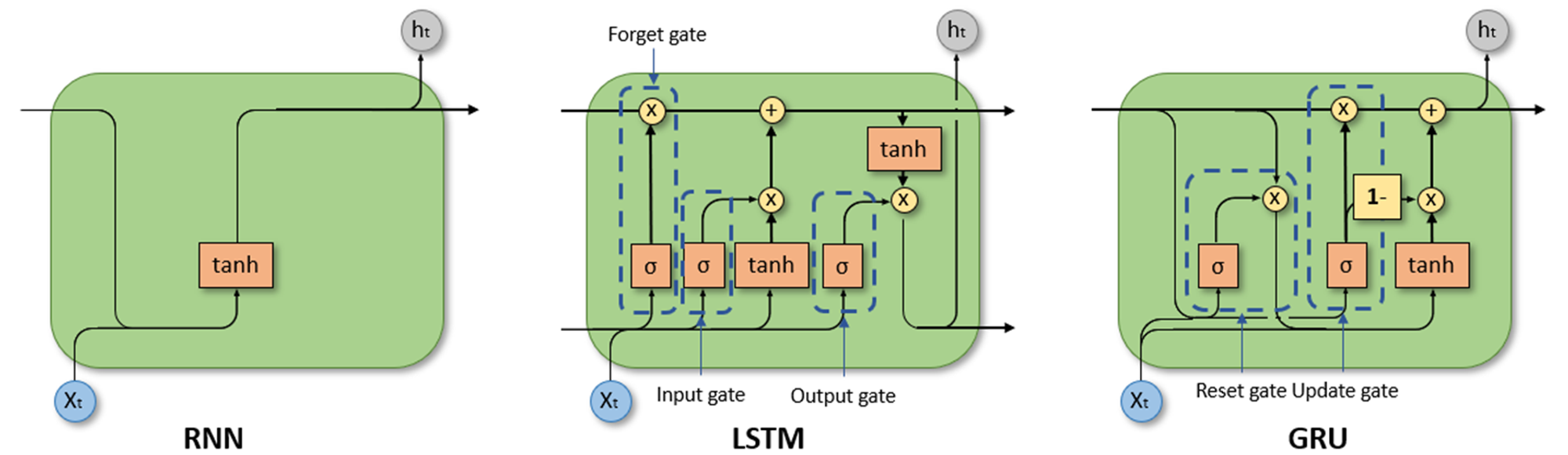

Over the years, many variants of neural networks have been introduced, such as Feedforward Neural Networks, Convolutional Neural Networks (CNNs), and Radial Basis Function Neural Networks. Recurrent Neural Networks (RNNs) emerged to process sequences of data; popular RNN variants include Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).

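RNNs handle sequential data well, but they struggle to learn dependencies across long sequences because gradients shrink as they are propagated back through many time steps (the vanishing gradient problem). LSTMs and GRUs mitigate this problem but do not eliminate it. RNNs are also slow to train: each step depends on the result of the previous one, so the computation cannot be parallelized across the sequence.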
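Both problems can be seen in a few lines of NumPy. The sketch below runs a simple tanh RNN (a hypothetical toy with random weights, not an LSTM or GRU) and tracks the norm of the gradient of the hidden state at step t with respect to the initial state. It assumes the recurrent weight matrix is scaled so that its largest singular value is 0.9; under that assumption the gradient norm can only shrink at every step. Note also that the loop cannot be parallelized, since each step needs the previous hidden state.

```python
import numpy as np

def rnn_gradient_norms(steps=50, hidden=8, scale=0.9, seed=0):
    """Run a tanh RNN and record ||d h_t / d h_0|| (spectral norm) at each step."""
    rng = np.random.default_rng(seed)
    # Recurrent weights: a random orthogonal matrix scaled so that its
    # largest singular value equals `scale` (< 1 here).
    q, _ = np.linalg.qr(rng.standard_normal((hidden, hidden)))
    W = scale * q
    U = rng.standard_normal((hidden, 3))   # input weights for 3 input features
    xs = rng.standard_normal((steps, 3))   # a random input sequence

    h = np.zeros(hidden)
    jac = np.eye(hidden)                   # d h_t / d h_0, identity at t = 0
    norms = []
    for x in xs:                           # strictly sequential: step t needs h_{t-1}
        h = np.tanh(W @ h + U @ x)
        # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W
        jac = (np.diag(1 - h ** 2) @ W) @ jac
        norms.append(np.linalg.norm(jac, 2))
    return norms

norms = rnn_gradient_norms()
print(norms[0], norms[9], norms[49])   # shrinks rapidly with sequence length
```

Since each step multiplies the gradient by a factor with norm at most 0.9, the signal from the start of a 50-step sequence is at most 0.9^50 (about 0.5%) of its original size. With a scale above 1 the opposite failure, exploding gradients, can occur.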

Transformers, introduced in 2017, address these limitations with a self-attention mechanism that relates every element of a sequence directly to every other element. Long-range dependencies no longer have to be carried step by step, and the whole sequence can be processed in parallel. Understanding this mechanism is key to understanding why Transformers perform so well.

The power of transformers

Transformers have revolutionized natural language processing (NLP), significantly outperforming traditional RNNs on many benchmarks. At the core of this architecture is the concept of “attention”: a mechanism that allows the model to weigh the importance of different words in a sentence when making predictions.

Essentially, a word’s meaning can be represented by a mathematical vector, an array of numbers. This vector, known as a word embedding, is typically fixed: the same word gets the same vector regardless of the sentence it appears in. However, consider the examples “money in the bank” and “the bank of the river.” The word “bank” carries a different meaning in each, a difference that a single fixed embedding cannot capture.

The Transformer’s innovation lies in its ability to transform these individual word vectors into context-aware representations. This way, “bank” gets assigned a different vector based on the surrounding words, such as “money” and “river.” This ability to focus on relevant information while disregarding noise contributes to the immense popularity and effectiveness of Transformers in machine learning.
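A toy example shows the difference between a fixed embedding and a context-aware one. The sketch below uses hand-made, hypothetical 4-dimensional embeddings (real embeddings are learned and have hundreds of dimensions) and a simplified self-attention without learned projections: each word’s new vector is a similarity-weighted average of all the vectors in its sentence.

```python
import numpy as np

# Hypothetical static embeddings, invented for illustration only:
# dim 0 ~ "finance", dim 1 ~ "nature", dim 2 ~ "function word", dim 3 ~ "noun".
EMBEDDINGS = {
    "money": np.array([1.0, 0.0, 0.0, 1.0]),
    "river": np.array([0.0, 1.0, 0.0, 1.0]),
    "bank":  np.array([0.5, 0.5, 0.0, 1.0]),
    "the":   np.array([0.0, 0.0, 1.0, 0.0]),
    "in":    np.array([0.0, 0.0, 1.0, 0.0]),
    "of":    np.array([0.0, 0.0, 1.0, 0.0]),
}

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def bank_vectors(sentence):
    """Return (static, contextual) vectors for "bank" in `sentence`."""
    words = sentence.split()
    X = np.stack([EMBEDDINGS[w] for w in words])
    # Simplified self-attention: similarity scores -> weights -> weighted average.
    weights = softmax(X @ X.T / np.sqrt(X.shape[1]))
    i = words.index("bank")
    return X[i], (weights @ X)[i]

static_1, context_1 = bank_vectors("money in the bank")
static_2, context_2 = bank_vectors("the bank of the river")
print(static_1, static_2)     # identical: the lookup ignores context
print(context_1, context_2)   # different: leans "finance" vs. "nature"
```

The static vectors for “bank” are identical, while the contextual ones are pulled toward the finance dimension in the first sentence and the nature dimension in the second.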

How do transformers work?

At the core of the architecture are self-attention mechanisms, which calculate a weighted sum of input elements. The attention mechanism can be expressed as:

Attention(Q, K, V) = softmax((Q * K^T) / sqrt(d_k)) * V,

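where Q, K, and V represent the query, key, and value matrices, respectively, and d_k is the dimension of the key vectors. In self-attention, all three matrices are computed from the same input sequence by multiplying it with separate learned weight matrices, so each row corresponds to one input element. The query matrix determines what each element is looking for, the key matrix determines how relevant each element is to a given query, and the value matrix contains the actual information to be passed along. The softmax function, applied to each row of scores, ensures that each query’s attention weights sum to 1, effectively distributing the model’s focus across the input sequence.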
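The formula translates almost line for line into NumPy. This is a minimal sketch of scaled dot-product self-attention; the projection matrices `W_q`, `W_k`, and `W_v` are random stand-ins for the learned weights, and the sizes are arbitrary.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V.

    Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v).
    Returns the output (n_queries, d_v) and the weights (n_queries, n_keys).
    """
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # relevance of every key to every query
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

# Self-attention: Q, K and V are all projections of the same input X.
# The projections are random here; in a real model they are learned.
rng = np.random.default_rng(0)
n_tokens, d_model, d_k = 5, 16, 8
X = rng.standard_normal((n_tokens, d_model))
W_q, W_k, W_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(output.shape, weights.sum(axis=1))
```

Each row of `weights` describes how one input element distributes its attention over all elements, and each row of `output` is the corresponding weighted sum of value vectors.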

For example, the attention mechanism in a Transformer model would likely assign a higher attention weight to the relationship between “bank” and “river” (or “money”) than between “bank” and “the.” This is because “river” and “money” provide crucial contextual information for disambiguating the meaning of “bank.” In contrast, “the” is a common article that doesn’t significantly contribute to understanding the specific meaning of “bank” in this context and can be considered noise.
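The “bank” example can be reproduced by computing a single row of attention weights. The query and key vectors below are hypothetical and hand-picked to mimic what a trained model might produce for “the bank of the river”; in a real model they come from learned projections.

```python
import numpy as np

words = ["the", "bank", "of", "the", "river"]
# Hypothetical 3-d key vectors, one per word (illustration only).
keys = np.array([
    [0.1, 0.0, 0.9],   # the
    [0.6, 0.6, 0.1],   # bank
    [0.1, 0.1, 0.8],   # of
    [0.1, 0.0, 0.9],   # the
    [0.2, 1.0, 0.1],   # river
])
query_bank = np.array([0.3, 1.0, 0.0])   # what "bank" is "looking for"

scores = keys @ query_bank / np.sqrt(keys.shape[1])
weights = np.exp(scores - scores.max())
weights /= weights.sum()                 # softmax: weights sum to 1
for word, weight in zip(words, weights):
    print(f"{word:>5}: {weight:.2f}")    # "river" gets the largest weight
```

Because the key for “river” aligns best with the query for “bank,” it receives the highest weight, while both occurrences of “the” receive the lowest.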

The scaling factor 1 / sqrt(d_k) keeps the dot products Q * K^T from growing large as the key dimension d_k increases. Without it, large scores would push the softmax into regions where its gradients are extremely small, which destabilizes training. The output of the attention function is a weighted sum of the value vectors (the rows of V), where the weights reflect how relevant each input element is to the query. This way, the Transformer learns which parts of the sequence to focus on when making predictions.
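A quick simulation shows why the scaling matters. Assuming query and key entries are independent with zero mean and unit variance (a simplifying assumption, not a property of trained models), the dot product of two d_k-dimensional vectors has variance d_k. Dividing by sqrt(d_k) brings it back to about 1, which keeps the softmax from collapsing onto a single element.

```python
import numpy as np

rng = np.random.default_rng(0)
d_k, n_pairs = 512, 10_000
q = rng.standard_normal((n_pairs, d_k))
k = rng.standard_normal((n_pairs, d_k))
dots = np.einsum("ij,ij->i", q, k)          # one dot product per (q, k) pair

var_raw = dots.var()                        # roughly d_k = 512
var_scaled = (dots / np.sqrt(d_k)).var()    # roughly 1
print(var_raw, var_scaled)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

# One query attending over 10 keys, with and without scaling.
query = rng.standard_normal(d_k)
keys = rng.standard_normal((10, d_k))
raw = keys @ query
p_raw = softmax(raw)                        # typically close to one-hot
p_scaled = softmax(raw / np.sqrt(d_k))      # noticeably more spread out
print(p_raw.max(), p_scaled.max())
```

A near one-hot softmax passes almost no gradient to the other elements, which is the training instability the scaling factor guards against.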

Transformers also employ multi-head attention, which lets the model attend to different parts of the input sequence, and to different kinds of relationships, simultaneously. The queries, keys, and values are projected into several lower-dimensional subspaces, called heads, and the attention function is computed for each head independently. The outputs of the heads are then concatenated and linearly transformed to produce the final output.
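The split, attend, concatenate, and project steps can be sketched in NumPy as follows. This assumes one full-width projection per Q, K, and V that is then reshaped into heads (a common, equivalent way of giving each head its own projection); the weights are random stand-ins for learned parameters.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, n_heads):
    """X: (n, d_model); each W_*: (d_model, d_model); d_model % n_heads == 0."""
    n, d_model = X.shape
    d_head = d_model // n_heads

    def split(M):
        # (n, d_model) -> (n_heads, n, d_head): each head gets its own slice.
        return M.reshape(n, n_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, n, n)
    heads = softmax(scores) @ V                          # (n_heads, n, d_head)
    # Concatenate the heads back to (n, d_model), then project.
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_o

rng = np.random.default_rng(1)
X = rng.standard_normal((6, 32))
Ws = [rng.standard_normal((32, 32)) / np.sqrt(32) for _ in range(4)]
out = multi_head_attention(X, *Ws, n_heads=4)
print(out.shape)   # same shape as the input: (6, 32)
```

Each head works in a 8-dimensional subspace here, so four heads cost roughly the same as one full-width attention while letting each head learn a different pattern.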

Finally, each Transformer layer passes the output of the attention mechanism through a feedforward neural network. In the original architecture, this network consists of two linear transformations with a ReLU activation in between, applied to each position separately and identically. Stacking several such attention-plus-feedforward layers yields a sequence of vectors in which each vector represents the model’s understanding of a specific input element in context, for example, the word “bank” as understood within the rest of the sentence.
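The position-wise feedforward network from the original paper, FFN(x) = max(0, x W1 + b1) W2 + b2, is short enough to write directly. The sketch below uses small, arbitrary sizes and random weights in place of learned ones; it also checks that the network treats each position independently.

```python
import numpy as np

def position_wise_ffn(X, W1, b1, W2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to every row (position) of X."""
    return np.maximum(0.0, X @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(0)
n, d_model, d_ff = 5, 16, 64        # the original paper used d_model=512, d_ff=2048
X = rng.standard_normal((n, d_model))   # e.g. the output of the attention step
W1, b1 = rng.standard_normal((d_model, d_ff)) * 0.1, np.zeros(d_ff)
W2, b2 = rng.standard_normal((d_ff, d_model)) * 0.1, np.zeros(d_model)

Y = position_wise_ffn(X, W1, b1, W2, b2)
# Applying the network to one position alone gives the same result.
print(np.allclose(Y[2], position_wise_ffn(X[2:3], W1, b1, W2, b2)[0]))
```

Mixing information across positions is left entirely to attention; the feedforward step then transforms each contextualized vector on its own.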

Applying transformers in finance

In the financial world, our focus shifts from words and sentences to time series data, such as stock prices and figures from financial reports. The attention mechanism applies to these sequences just as well.

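Imagine representing a single trading day as a vector, analogous to a word in a sentence. This vector could encompass various features like prices, financial ratios, sentiment scores, or other relevant data points for all companies within our investment universe. By arranging these vectors in chronological order over a window of dates, we create a sequence that serves as input for the Transformer. Because self-attention by itself ignores order, the position of each date in the sequence is typically supplied through positional encodings, as in the original Transformer.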
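Building such input sequences is mostly array bookkeeping. The sketch below assumes the daily feature vectors are already assembled into a NumPy array with one row per trading day; the universe size, feature count, window length, and the random placeholder data are all hypothetical.

```python
import numpy as np

def make_windows(daily_features, window):
    """Turn a (n_days, n_features) array into overlapping sequences.

    Row t of `daily_features` is the vector for trading day t (e.g. prices,
    ratios and sentiment scores for every company, flattened). Returns an
    array of shape (n_days - window + 1, window, n_features), where each
    entry is a chronologically ordered sequence of `window` days.
    """
    n_days = daily_features.shape[0]
    return np.stack([daily_features[t:t + window]
                     for t in range(n_days - window + 1)])

# Hypothetical universe: 3 companies x 4 features each = 12 numbers per day.
rng = np.random.default_rng(0)
daily = rng.standard_normal((250, 3 * 4))    # placeholder, not real market data
sequences = make_windows(daily, window=20)   # 20-day input sequences
print(sequences.shape)
```

In practice, any normalization of the features and the choice of prediction target (for example, returns after the last day of each window) should use only information available at that date, to avoid look-ahead bias.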

Just as the Transformer learns to understand the meaning of words within a sentence, it can also learn to interpret these feature vectors in the context of time. By identifying patterns and correlations within this sequence, the model can make informed predictions about stock price movements. The attention weights show which dates the model draws on most for a given prediction, similar to how it identifies key words in a sentence, although they are only a partial guide to what actually drives the output.
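Putting the pieces together, the sketch below passes one window of daily feature vectors through a single self-attention layer and reads off both a prediction and the attention weights over dates. It is a deliberately minimal, untrained model: the parameter names in `params`, the sizes, and the choice to predict from the most recent day’s vector are assumptions for illustration, and with random weights the prediction itself is meaningless. Date order is injected with the sinusoidal positional encodings from the original Transformer paper.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def positional_encoding(n_positions, d_model):
    """Sinusoidal encodings: sin on even dimensions, cos on odd dimensions."""
    pos = np.arange(n_positions)[:, None]
    i = np.arange(d_model)[None, :]
    angles = pos / np.power(10000.0, (2 * (i // 2)) / d_model)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))

def predict_with_attention(window, params):
    """window: (n_days, n_features). Returns (prediction, attention over days)."""
    H = window @ params["W_in"]                       # embed each day
    H = H + positional_encoding(*H.shape)             # inject date order
    Q, K, V = H @ params["W_q"], H @ params["W_k"], H @ params["W_v"]
    weights = softmax(Q @ K.T / np.sqrt(K.shape[1]))  # (n_days, n_days)
    context = weights @ V
    # Hypothetical readout: predict from the most recent day's context vector.
    prediction = float(context[-1] @ params["w_out"])
    return prediction, weights[-1]                    # last day's attention per date

rng = np.random.default_rng(0)
n_features, d_model = 12, 16
params = {   # random stand-ins for parameters that would be learned in training
    "W_in": rng.standard_normal((n_features, d_model)) / np.sqrt(n_features),
    "W_q": rng.standard_normal((d_model, d_model)) / np.sqrt(d_model),
    "W_k": rng.standard_normal((d_model, d_model)) / np.sqrt(d_model),
    "W_v": rng.standard_normal((d_model, d_model)) / np.sqrt(d_model),
    "w_out": rng.standard_normal(d_model) / np.sqrt(d_model),
}
window = rng.standard_normal((20, n_features))   # placeholder 20-day window
prediction, day_weights = predict_with_attention(window, params)
most_attended_day = int(np.argmax(day_weights))
print(prediction, most_attended_day)
```

A production model would stack several such layers with multiple heads and feedforward blocks, and fit the parameters on historical data before any attention weights are worth inspecting.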

For instance, the Transformer might learn that a combination of Apple’s price-to-book ratio on a certain date and Tesla’s opening price on another date tends to precede an increase in Microsoft’s stock price. This capacity to discern intricate patterns in financial market data is what makes the Transformer a promising tool for informing investment decisions.

Challenges and considerations

While Transformers in finance hold immense promise, they are not without shortcomings. One limitation is their computational cost. Training large Transformer models requires significant computational resources, which can be a barrier for smaller firms, and because every element attends to every other element, the cost of self-attention grows quadratically with sequence length. However, ongoing research is focused on developing more efficient training algorithms to address this issue.
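A back-of-the-envelope calculation illustrates the quadratic growth. The sketch below estimates only the memory for one layer’s attention-score matrices for a single sequence, assuming 8 heads and 32-bit floats (both hypothetical choices); real training also stores many other activations.

```python
def attention_score_memory_mb(seq_len, n_heads=8, bytes_per_value=4):
    """Memory (MB) for one layer's n_heads x seq_len x seq_len attention scores."""
    return n_heads * seq_len * seq_len * bytes_per_value / 1e6

# Sequence lengths in trading days, e.g. roughly 1, 2, 4 and 8 years.
for seq_len in (250, 500, 1000, 2000):
    print(seq_len, attention_score_memory_mb(seq_len))
```

This prints 2.0, 8.0, 32.0 and 128.0 MB: each doubling of the window quadruples the cost, per layer and per sequence in a batch.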

Another challenge is the need for large amounts of labeled training data. While Transformers can learn from unlabeled data, their performance improves significantly with access to labeled examples. In finance, obtaining high-quality labeled data can be expensive and time-consuming. One way to address this is to aggregate data from multiple sources, a process at which our proprietary Ticker Mapping algorithms excel. This approach lets us draw on diverse datasets, expanding what our models can learn and improving the accuracy of our quantitative trading strategies and financial data analysis.
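The basic idea of aggregating sources can be sketched generically: map each source’s identifiers to a common key, then merge the fields. This is not Affor Analytics’ proprietary Ticker Mapping algorithm; the vendor identifiers, mapping table, and values below are hypothetical placeholders, and building a reliable mapping (the hard part) is assumed to have been done already.

```python
# Two hypothetical data sources that identify the same companies differently.
prices = {"VEND_A_001": 100.0, "VEND_A_002": 200.0}          # source A: price
sentiment = {"vb-aapl": 0.3, "vb-msft": -0.1, "vb-xyz": 0.7}  # source B: score

# Hypothetical mapping from each source's identifier to one common ticker.
ticker_map = {
    "VEND_A_001": "AAPL", "VEND_A_002": "MSFT",
    "vb-aapl": "AAPL", "vb-msft": "MSFT",
}

def merge_sources(sources, ticker_map):
    """Combine {identifier: value} dicts into {ticker: {field: value}} records.

    Identifiers missing from `ticker_map` are collected separately rather
    than silently dropped, so they can be reviewed.
    """
    merged, unmapped = {}, []
    for field, data in sources.items():
        for identifier, value in data.items():
            ticker = ticker_map.get(identifier)
            if ticker is None:
                unmapped.append((field, identifier))
                continue
            merged.setdefault(ticker, {})[field] = value
    return merged, unmapped

merged, unmapped = merge_sources({"price": prices, "sentiment": sentiment}, ticker_map)
print(merged)     # one record per ticker, combining both sources
print(unmapped)   # identifiers that still need a mapping
```

Surfacing unmapped identifiers instead of discarding them matters in practice, since silent gaps in a merged dataset can bias whatever a model learns from it.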

Looking ahead: the future of transformers in finance

Despite these challenges, the future of Transformers in finance looks bright. As research progresses, we can expect to see more efficient training algorithms, improved model architectures, and novel applications in various financial domains. At Affor Analytics, the exploration of Transformers aims to revolutionize investment strategies and deliver superior returns for our clients.

To conclude, Transformers represent a significant advancement in NLP and machine learning. Their ability to capture complex relationships in data makes them a powerful tool for the finance industry. By embracing technologies like Transformers and investing in research and development, we can unlock new insights, enhance our decision-making processes, and ultimately achieve greater success in the ever-competitive world of finance.

To learn more about Transformers, we highly recommend these articles:

- Transformers visually explained: part 1 and part 2

- The GPT-3 Architecture, on a Napkin

- How Transformers Work: A Detailed Exploration of Transformer Architecture
