Using innovative RL algorithms to profitably trade commodity futures

For thousands of years, humans have adapted to complex environments, learning from experiences to navigate the world around them. Imagine applying that same learning process to financial markets. At Affor Analytics, we’re exploring how Deep Reinforcement Learning (DRL) can be used to analyze and trade commodity futures, helping traders make more informed decisions and build portfolios that can withstand market fluctuations.

Understanding the concept

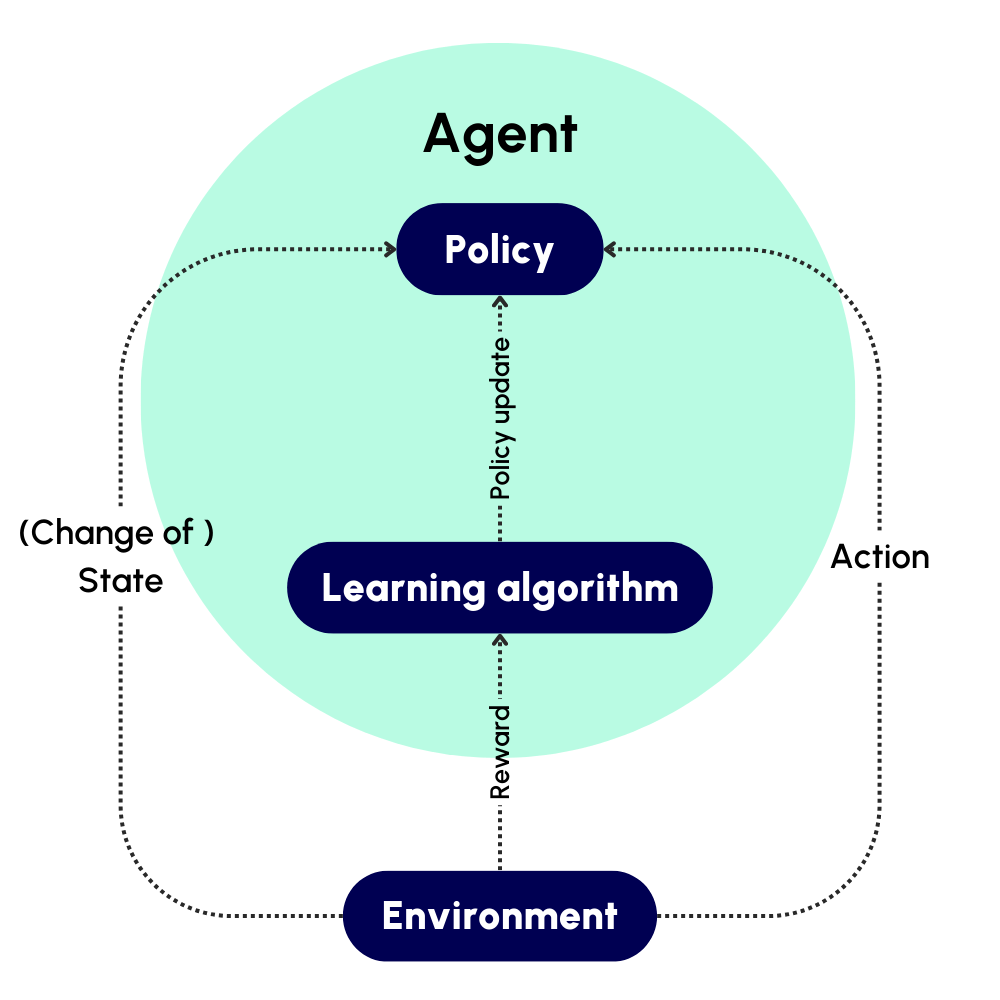

Deep Reinforcement Learning (DRL) is a specialized area of machine learning in which algorithms learn by interacting with their environment and receiving feedback, in the form of rewards, on the outcomes of their actions. The “deep” refers to the use of deep neural networks to represent what the agent learns. It’s similar to how we learn from experience: by understanding what works and what doesn’t.

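Reinforcement learning first made headlines by teaching computers to play games, from Atari classics like Pac-Man to board games like chess. Using DRL concepts, AlphaZero taught itself chess by playing millions of games against itself and went on to defeat Stockfish, then a leading chess engine. Rather than memorizing the best move for every conceivable board state, which is impossible given how many positions exist, it learned to evaluate positions and choose strong moves even in situations it had never seen.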

In essence, DRL learns a policy: a rule that picks the best action for the current state of the environment. It learns this policy from how effective its actions turn out to be, measured by the immediate reward plus the future rewards that follow from taking an action in a given state. For example, moving a pawn (the action) in the current board position (the state) might lead to checkmate (a big reward) a few moves later. Over time, AlphaZero (the agent) learns the value of moving that pawn in that position.
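Learning “the value of an action in a state” can be made concrete with tabular Q-learning, a classic reinforcement learning update. This is a minimal sketch for intuition only, not how AlphaZero works (AlphaZero pairs a neural network with Monte Carlo tree search); the function name and the chess-flavoured state and action labels are hypothetical.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99, done=False):
    """One tabular Q-learning step on q, a dict keyed by (state, action).

    Moves Q(s, a) a fraction alpha toward the target r + gamma * max_a' Q(s', a').
    If the episode has ended (done=True), there is no future, so the target is just r.
    """
    future = 0.0 if done else max(q[(next_state, a)] for a in actions)
    target = reward + gamma * future
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


# Hypothetical example: pushing a pawn in "position_A" delivers checkmate (reward 1, game over).
q = defaultdict(float)  # unseen (state, action) pairs start at a value of 0
actions = ["push_pawn", "move_knight"]
q_learning_update(q, "position_A", "push_pawn", reward=1.0,
                  next_state="checkmate", actions=actions, alpha=0.5, done=True)
```

Each repeated experience nudges the estimate further toward the observed outcome, which is the “over time” in the paragraph above; deep RL replaces the table with a neural network so that values generalize to unseen states.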

In finance, DRL algorithms can process large amounts of data, such as price histories, economic indicators, and sentiment data, and develop strategies that keep improving as new data arrives. This adaptability could make them particularly effective in the unpredictable world of commodity futures, where traditional models might falter. Unlike those models, DRL doesn’t rely on fixed rules; instead, it learns to maximize a long-term reward, which can be defined in terms of profit and loss (PnL), a risk-adjusted measure such as the Sharpe ratio, or a penalty on downside risk.

The potential of applying DRL in trading

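The true power of DRL in trading lies in its ability to learn and adapt. One promising approach uses a Deep Q-Network (DQN) agent enhanced with two well-known techniques: double DQN, which reduces standard DQN’s tendency to overestimate the value of actions, and a dueling architecture, which estimates the value of a state separately from the advantage of each action. Both aim to make the learned models more accurate and training more stable.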
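To make the two enhancements concrete, here is a minimal NumPy sketch of the formulas as they are commonly defined for dueling and double DQN. The function names are hypothetical, and the networks that would produce these inputs are left out.

```python
import numpy as np


def dueling_q_values(state_value, advantages):
    """Combine the dueling streams: Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a').

    state_value: shape (batch, 1); advantages: shape (batch, n_actions).
    Subtracting the mean advantage pins down how Q is split between V and A.
    """
    state_value = np.asarray(state_value, dtype=float)
    advantages = np.asarray(advantages, dtype=float)
    return state_value + advantages - advantages.mean(axis=1, keepdims=True)


def double_dqn_targets(rewards, dones, q_next_online, q_next_target, gamma=0.99):
    """Double DQN targets: the online network selects the next action and the
    target network evaluates it, which curbs the overestimation of plain DQN."""
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    q_next_online = np.asarray(q_next_online, dtype=float)
    q_next_target = np.asarray(q_next_target, dtype=float)
    best_next = np.argmax(q_next_online, axis=1)                      # action selection
    next_values = q_next_target[np.arange(len(best_next)), best_next]  # action evaluation
    return rewards + gamma * (1.0 - dones) * next_values              # no bootstrap at episode end
```

For a sample with reward 1, online next-state values `[1, 2, 0]`, target values `[10, 3, 7]` and `gamma=0.5`, double DQN evaluates action 1 and gets a target of 1 + 0.5 × 3 = 2.5, whereas plain DQN would take the target network’s maximum and get 1 + 0.5 × 10 = 6.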

This approach could use Gated Recurrent Units (GRUs), a type of recurrent neural network suited to time-series data, to capture intricate patterns in market behavior. By processing inputs like price data, market indicators, and sentiment scores, the model would calculate Q-values, which estimate the expected cumulative (discounted) future reward of each trading action in the current state. The algorithm could then select the action with the highest Q-value to guide trading decisions.
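The GRU-plus-Q-value pipeline described above can be sketched as a hand-written forward pass in NumPy. This is a sketch under assumptions: the class name `GRUQNetwork`, the three-action set, the random initialization, and the per-step features (return, volatility, sentiment) are hypothetical, and training is omitted entirely.

```python
import numpy as np

ACTIONS = ("sell", "hold", "buy")  # hypothetical action set


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUQNetwork:
    """Minimal GRU encoder followed by a linear Q-value head (forward pass only)."""

    def __init__(self, n_features, n_hidden, n_actions=len(ACTIONS), seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_hidden)
        # Input weights W, recurrent weights U and biases b for the update (z),
        # reset (r) and candidate (h) parts of the GRU cell.
        self.W = {g: rng.uniform(-scale, scale, (n_hidden, n_features)) for g in "zrh"}
        self.U = {g: rng.uniform(-scale, scale, (n_hidden, n_hidden)) for g in "zrh"}
        self.b = {g: np.zeros(n_hidden) for g in "zrh"}
        self.W_q = rng.uniform(-scale, scale, (n_actions, n_hidden))
        self.b_q = np.zeros(n_actions)
        self.n_hidden = n_hidden

    def q_values(self, window):
        """window: array of shape (time_steps, n_features), oldest row first."""
        h = np.zeros(self.n_hidden)
        for x in np.asarray(window, dtype=float):
            z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])  # update gate
            r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])  # reset gate
            h_cand = np.tanh(self.W["h"] @ x + self.U["h"] @ (r * h) + self.b["h"])
            h = (1.0 - z) * h + z * h_cand  # blend the old state with the candidate
        return self.W_q @ h + self.b_q  # one Q-value per action

    def greedy_action(self, window):
        """Pick the action with the highest estimated Q-value."""
        return ACTIONS[int(np.argmax(self.q_values(window)))]


# Hypothetical input: 20 time steps of [return, volatility, sentiment] features.
window = np.random.default_rng(1).normal(size=(20, 3))
net = GRUQNetwork(n_features=3, n_hidden=16)
q = net.q_values(window)
```

In practice a deep learning library’s built-in GRU layer and automatic differentiation would replace this hand-written loop; writing it out only shows how the hidden state summarizes the window before the Q-value head.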

For instance, consider a DRL agent trained to trade gold futures. By analyzing historical prices, market volatility, and sentiment data, the agent could determine whether to buy, sell, or hold. Over time, as the agent interacts with the market, it would refine its strategy, learning from each trade to make smarter decisions in the future.
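A DRL agent needs an environment that turns its buy, sell, or hold choices into rewards. The sketch below is a hypothetical toy for a single futures contract: it assumes “buy” means going long one contract, “sell” means going short one contract, “hold” keeps the current position, and the reward is the position’s price change minus a flat transaction cost per contract traded. Contract multipliers, margin, and contract rolls are ignored.

```python
from dataclasses import dataclass


@dataclass
class FuturesTradingEnv:
    """Hypothetical toy environment; the state is (time index, current position)."""
    prices: list
    cost_per_trade: float = 0.0  # assumed cost, in price units per contract traded
    t: int = 0
    position: int = 0  # -1 short, 0 flat, +1 long

    def step(self, action):
        """Apply 'buy', 'sell' or 'hold' and return (state, reward, done)."""
        target = {"sell": -1, "hold": self.position, "buy": 1}[action]
        cost = self.cost_per_trade * abs(target - self.position)
        self.position = target
        price_change = self.prices[self.t + 1] - self.prices[self.t]
        reward = self.position * price_change - cost
        self.t += 1
        done = self.t == len(self.prices) - 1
        return (self.t, self.position), reward, done


# Hypothetical gold futures prices; an agent would call step() once per bar.
env = FuturesTradingEnv(prices=[100.0, 101.0, 99.0, 102.0], cost_per_trade=0.1)
state, reward, done = env.step("buy")
```

Charging the cost on the change in position means that flipping from short to long costs twice as much as opening a position, which discourages the agent from overtrading.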

Beyond trading signals: wider possibilities for DRL

While the initial focus might be on generating trading signals, the potential applications of DRL extend well beyond them:

- High-frequency trading: DRL algorithms are well suited to high-frequency trading because they can process large amounts of data in real time and adapt to rapidly changing market environments. Whereas traditional momentum or mean-reversion techniques often assume constant volatility, DRL can adjust to fluctuating market conditions. One paper on Hierarchical Reinforcement Learning (HRL) proposes a meta-agent that selects the most suitable sub-agent for the current market state, aiming to make better decisions in environments where conditions change within seconds.

- Dynamic portfolio management: DRL’s ability to keep learning and adapting makes it well suited to dynamic portfolio management. By adjusting portfolios to align with investor goals and reacting to shifting market conditions, DRL can help investment strategies remain robust as market dynamics evolve. This adaptability is a significant advantage over traditional methods that may not account for real-time changes in risk or market sentiment.

- Advanced risk management: The flexibility of DRL allows for the development of sophisticated risk management models that may identify and mitigate potential risks more effectively than traditional approaches. Because DRL can analyze large datasets and recognize patterns and relationships that are not immediately apparent, it can help anticipate risks and respond to them as they emerge.
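The meta-agent idea from the high-frequency trading point can be illustrated with a deliberately simplified sketch: two hypothetical rule-based sub-agents, a hypothetical volatility-based regime label, and a meta-agent that learns, per regime, which sub-agent to delegate to. It treats each delegation as a one-step (contextual bandit) decision, which is a simplification; the HRL paper mentioned above may structure and train its agents differently.

```python
import random
from collections import defaultdict


def momentum_agent(recent_returns):
    """Hypothetical sub-agent: follow the sign of the latest return."""
    return "buy" if recent_returns[-1] > 0 else "sell"


def mean_reversion_agent(recent_returns):
    """Hypothetical sub-agent: bet against the latest return."""
    return "sell" if recent_returns[-1] > 0 else "buy"


SUB_AGENTS = [momentum_agent, mean_reversion_agent]


def regime(recent_returns, threshold=0.01):
    """Hypothetical market-state label based on realized volatility."""
    mean = sum(recent_returns) / len(recent_returns)
    vol = (sum((r - mean) ** 2 for r in recent_returns) / len(recent_returns)) ** 0.5
    return "high_vol" if vol > threshold else "low_vol"


class MetaAgent:
    """Learns, per regime, the average reward of delegating to each sub-agent."""

    def __init__(self, n_agents, epsilon=0.1, alpha=0.1, seed=0):
        self.q = defaultdict(float)  # (regime, sub-agent index) -> estimated value
        self.n_agents = n_agents
        self.epsilon = epsilon  # exploration rate
        self.alpha = alpha      # learning rate
        self.rng = random.Random(seed)

    def choose(self, state):
        """Epsilon-greedy choice of which sub-agent acts in this regime."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_agents)
        return max(range(self.n_agents), key=lambda i: self.q[(state, i)])

    def update(self, state, agent_index, reward):
        """Move the estimate toward the reward the chosen sub-agent earned."""
        key = (state, agent_index)
        self.q[key] += self.alpha * (reward - self.q[key])


# One decision step with hypothetical recent returns.
meta = MetaAgent(len(SUB_AGENTS))
recent = [0.02, -0.03, 0.025]
chosen = meta.choose(regime(recent))
action = SUB_AGENTS[chosen](recent)
```

The meta-agent never trades directly; it only learns which specialist has paid off in which kind of market, which is the core idea behind selecting “the most suitable agent for the current market state”.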

Steps to get started

If you’re intrigued by the potential of DRL for trading, here are some practical steps to consider:

- Build a strong foundation: Start by gaining a deep understanding of the fundamental concepts of Reinforcement Learning. This theoretical groundwork is essential for implementing DRL algorithms effectively.

- Backtest your strategies: Before deploying any strategy in live markets, rigorously backtest it on historical data. This helps to assess the strategy’s performance, identify potential weaknesses, and ensure that it aligns with your investment goals. Be mindful of common biases such as survivorship bias, look-ahead bias (using information that would not have been available at the time of the trade), and data-mining bias (overfitting to noise in historical data). Read more about this in our whitepaper ‘Navigating the world of quantitative investing: a concise guide’.

- Paper trade: Once you’ve backtested your strategy, consider paper trading to simulate real-world conditions without risking actual capital. This allows you to see how the strategy performs in practice and make necessary adjustments before going live.
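A common way to avoid look-ahead bias in a backtest is to let a position decided with data up to day t earn only the return from day t to day t+1. The minimal sketch below does exactly that and charges a proportional cost on turnover; the function name, the cost model, and the annualized Sharpe ratio (risk-free rate ignored, 252 periods per year assumed) are illustrative assumptions, not a full backtesting framework.

```python
import math


def backtest(prices, signals, cost_per_unit_turnover=0.0, periods_per_year=252):
    """Return per-period strategy returns and an annualized Sharpe ratio.

    signals[t] is the position (-1, 0 or +1) chosen using data up to and
    including day t; it earns the return from t to t+1, never the same-day move.
    """
    if len(prices) != len(signals):
        raise ValueError("prices and signals must have the same length")
    if len(prices) < 3:
        raise ValueError("need at least three prices")
    strategy_returns = []
    prev_position = 0
    for t in range(len(prices) - 1):
        position = signals[t]  # only information available at day t
        asset_return = prices[t + 1] / prices[t] - 1.0
        turnover = abs(position - prev_position)
        strategy_returns.append(position * asset_return - cost_per_unit_turnover * turnover)
        prev_position = position
    n = len(strategy_returns)
    mean = sum(strategy_returns) / n
    var = sum((r - mean) ** 2 for r in strategy_returns) / (n - 1)
    # Sharpe ratio without a risk-free rate, scaled to an annual figure.
    sharpe = mean / math.sqrt(var) * math.sqrt(periods_per_year) if var > 0 else float("nan")
    return strategy_returns, sharpe


returns, sharpe = backtest([100.0, 110.0, 99.0, 99.0], [1, -1, 0, 0])
```

The same lag discipline applies when evaluating a DRL agent: its observation at step t must be built only from data available at t, or the backtest will overstate performance.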

Benefits of applying DRL in finance

Exploring the use of Deep Reinforcement Learning in finance reveals several key benefits that could set it apart from traditional trading methods:

- Learning from experience: Unlike rule-based systems that follow a fixed set of pre-defined rules, DRL learns from the outcomes of its actions. This means it can adapt and evolve its strategies based on real-time feedback, discovering new, potentially more profitable approaches as it goes.

- Adaptability to market changes: Because DRL models can be retrained or updated as new data arrives, they can adjust to new market conditions instead of relying on rules that may go stale, making them more resilient and able to evolve alongside market dynamics.

- Customizable reward functions: DRL allows for the tailoring of reward functions to meet specific investment goals. Whether optimizing for risk-adjusted returns, minimizing downside risks, or reducing transaction costs, the reward function can be designed to align with your strategic objectives.

- Insights from large datasets: DRL’s ability to process and analyze vast amounts of data enables it to uncover subtle patterns and relationships that may be missed by traditional methods or human analysis.

- Flexibility in time horizons: DRL balances short-term and long-term goals through its discount factor, which sets how much future rewards count relative to immediate ones: a value near 0 favors immediate rewards, while a value near 1 gives distant rewards more weight. Tuning it allows strategies to be optimized for different investment horizons, which can be particularly advantageous in complex markets like commodity futures.
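The reward-function and discount-factor points above can be made concrete in a few lines. The sketch assumes two hypothetical reward choices, raw PnL net of transaction costs and a variant that weighs losses more heavily than gains, and computes the discounted return that a DRL agent tries to maximize; the function names and the default downside weight are illustrative.

```python
def pnl_reward(position, price_change, turnover, cost_per_unit=0.0):
    """Hypothetical reward: profit and loss of the position net of trading costs."""
    return position * price_change - cost_per_unit * turnover


def downside_penalized_reward(pnl, downside_weight=2.0):
    """Hypothetical risk-aware reward: losses count downside_weight times as much as gains."""
    return pnl if pnl >= 0 else downside_weight * pnl


def discounted_return(rewards, gamma):
    """G = r_0 + gamma * r_1 + gamma**2 * r_2 + ..., accumulated from the end."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# A reward of 10 arriving two steps later is worth 8.1 today when gamma = 0.9,
# but nothing at all when gamma = 0.
far_sighted = discounted_return([0.0, 0.0, 10.0], gamma=0.9)
myopic = discounted_return([0.0, 0.0, 10.0], gamma=0.0)
```

As a rule of thumb, a discount factor γ spreads most of its weight over roughly 1/(1 − γ) steps, so γ = 0.99 corresponds to a horizon on the order of 100 steps.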

Leveraging technology to maximize investment success

DRL offers a promising avenue for developing more adaptive and intelligent trading strategies. By continuously learning and evolving, DRL could help traders navigate the complexities of commodity futures markets with greater confidence. As we continue to explore the possibilities of DRL, Affor Analytics remains committed to leveraging cutting-edge technology to push the boundaries of what’s possible in finance.

Interested in how we apply Deep Reinforcement Learning? Contact our expert team today and we’ll get in touch with you!
